Self-Distillation Bridges Distribution Gap in Language Model Fine-Tuning

First author: Zhaorui Yang

Affiliation: Zhejiang University

Published: May 2024

Venue: ACL 2024

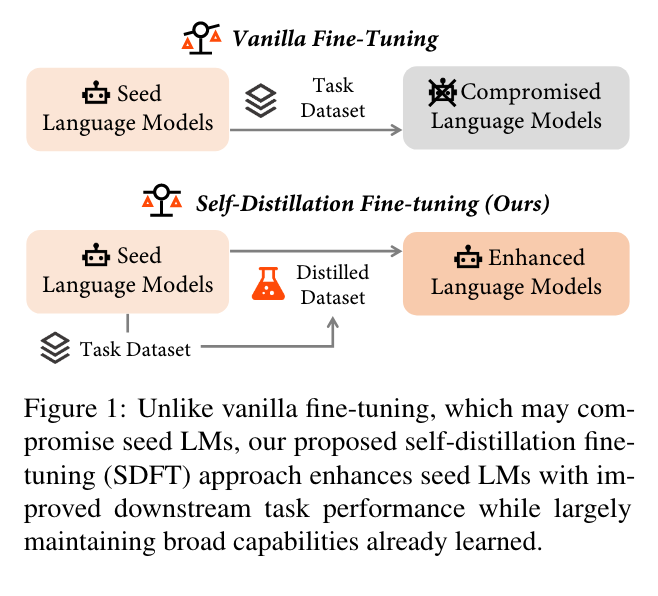

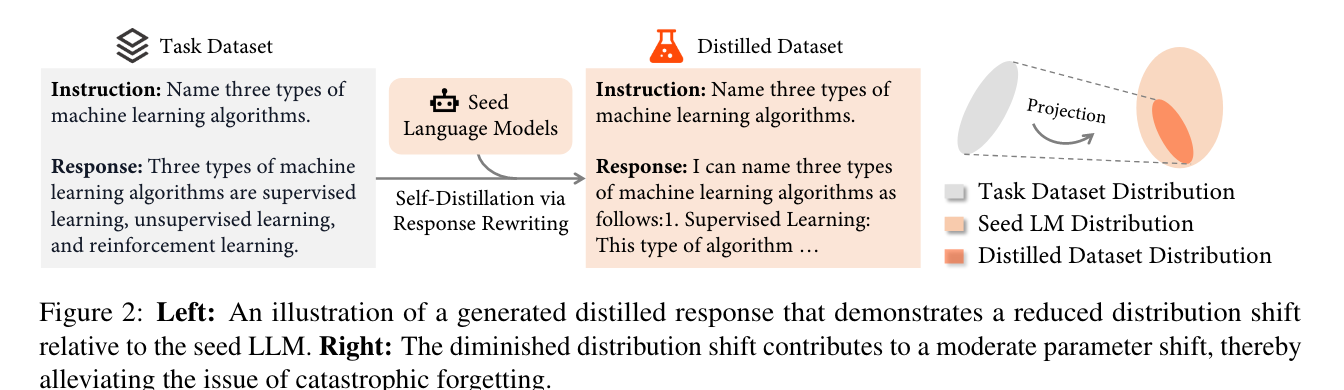

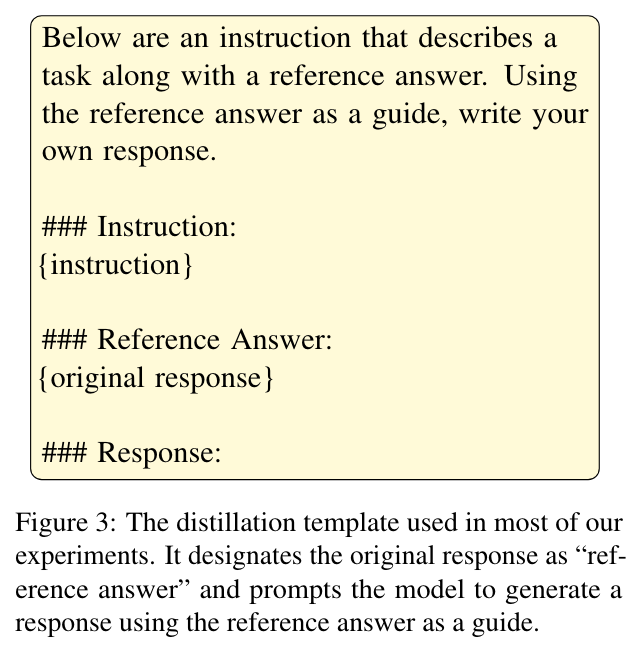

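Key idea: Self-Distillation Fine-Tuning (SDFT) fine-tunes the model on data generated by the model itself. This narrows the gap between the task dataset's distribution and the LLM's own distribution, a gap that otherwise leads to catastrophic forgetting during fine-tuning.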
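The idea can be sketched as a data-preparation step that runs before ordinary fine-tuning. This is a minimal illustration, not the authors' implementation. The prompt wording in `REWRITE_TEMPLATE`, the function name `build_distilled_dataset`, and the `generate` and `keep` callables are all assumptions made for this example. `generate` stands in for a call to the seed model, and `keep` stands in for whatever quality check decides whether a rewritten response is usable.

```python
from typing import Callable, Dict, List

# Hypothetical rewrite prompt; the wording is illustrative, not the paper's template.
REWRITE_TEMPLATE = (
    "Instruction:\n{instruction}\n\n"
    "Reference answer:\n{response}\n\n"
    "Rewrite the reference answer in your own words."
)

Example = Dict[str, str]


def build_distilled_dataset(
    examples: List[Example],
    generate: Callable[[str], str],
    keep: Callable[[Example, str], bool],
) -> List[Example]:
    """Replace each reference response with the model's own rewrite of it.

    `generate` is assumed to wrap the seed model (prompt in, text out).
    `keep` is an assumed quality check; when it rejects a rewrite,
    the original reference response is kept instead.
    """
    distilled = []
    for ex in examples:
        prompt = REWRITE_TEMPLATE.format(
            instruction=ex["instruction"], response=ex["response"]
        )
        rewritten = generate(prompt)
        target = rewritten if keep(ex, rewritten) else ex["response"]
        distilled.append({"instruction": ex["instruction"], "response": target})
    return distilled
```

The returned list has the same instructions as the input but with targets phrased by the model, so it can be passed to a standard supervised fine-tuning loop in place of the original dataset.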

Reference: Yang Z, Liu Q, Pang T, et al. Self-Distillation Bridges Distribution Gap in Language Model Fine-Tuning[J]. arXiv preprint arXiv:2402.13669, 2024.
